Today, Weibo censors deleted a photograph of a flowchart demonstrating how security software giant Qihoo 360 censors its generative artificial intelligence (AI) product.

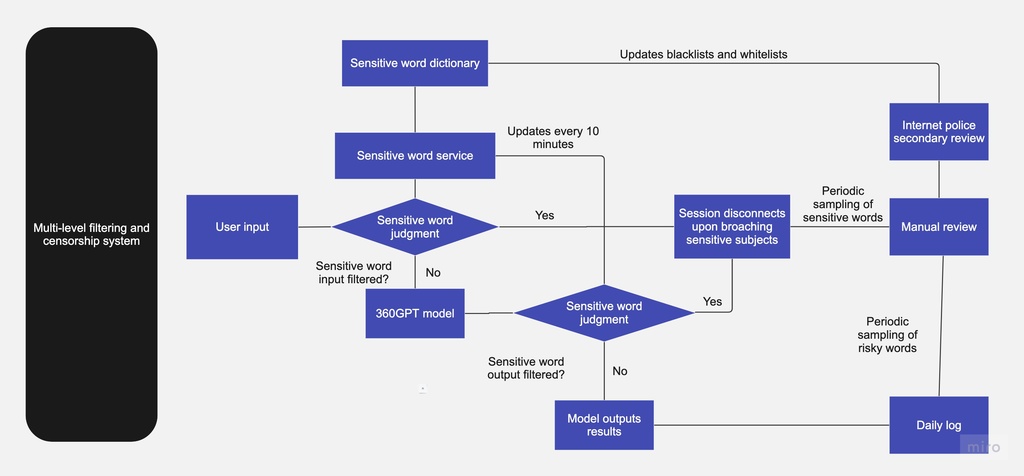

During a June 13 launch event for Qihoo 360’s latest large language model (LLM), CEO Zhou Hongyi presented a slide on the model’s internal censorship mechanisms. The LLM has a two-step censorship process. First, it filters user inputs for sensitive words; if any are detected, the chat disconnects. If none are detected, the LLM generates a response and runs it through the same filter. The chat disconnects if the response contains sensitive words; if not, the user sees the response to their query. The list of sensitive words is updated every 10 minutes, and the sensitive-word blacklists are shared with a branch of the Public Security Bureau responsible for monitoring the internet. Even responses that are not initially flagged are reviewed on the backend for “risky words,” terms that might become sensitive. Outputs are logged daily and then manually reviewed by in-house or contracted censors, presumably to fine-tune the LLM’s results.

The Qihoo 360 slide has many similarities to a leaked internal flowchart revealing how Xiaohongshu (an Instagram-like Chinese social media and e-commerce platform) censors “sudden incidents.”
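The two-stage flow on the slide (screen the prompt, generate, then screen the reply with the same filter) can be approximated in a few lines. This is a minimal Python sketch under stated assumptions, not Qihoo 360’s code: the names `KeywordFilter` and `moderated_chat`, the plain substring matching, and the placeholder blocklist are all hypothetical, and the sketch omits the 10-minute list refresh, the backend “risky word” review, and the daily logging the slide describes.

```python
from collections.abc import Callable
from dataclasses import dataclass, field

DISCONNECT = "[chat disconnected]"


@dataclass
class KeywordFilter:
    # Hypothetical blocklist; the slide does not disclose which terms are listed.
    blocked: set[str] = field(default_factory=set)

    def contains_blocked(self, text: str) -> bool:
        # Assumption: naive substring matching against every listed term.
        return any(term in text for term in self.blocked)


def moderated_chat(
    prompt: str,
    generate: Callable[[str], str],
    kw_filter: KeywordFilter,
) -> str:
    # Step 1: screen the user's input before the model sees it.
    if kw_filter.contains_blocked(prompt):
        return DISCONNECT
    reply = generate(prompt)
    # Step 2: screen the model's output with the same filter.
    if kw_filter.contains_blocked(reply):
        return DISCONNECT
    return reply


if __name__ == "__main__":
    f = KeywordFilter({"forbidden"})
    echo = lambda p: "You said: " + p
    print(moderated_chat("hello", echo, f))               # You said: hello
    print(moderated_chat("a forbidden topic", echo, f))   # [chat disconnected]
    print(moderated_chat("hi", lambda p: "forbidden", f)) # [chat disconnected]
```

The third call shows why the output check matters in this design: an innocuous prompt can still yield a flagged reply, which the input check alone would not catch.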

The photograph of the Qihoo 360 presentation went viral on Weibo and was live for hours before censors deleted it.

The day before the launch event, Qihoo 360’s LLM became the first model to pass a security review conducted by an arm of the powerful Ministry of Industry and Information Technology. The Chinese government has been deeply concerned with how to control information generated by LLMs since the technology emerged. Qihoo 360’s security software is ubiquitous on Chinese PCs.

The slide did not list what information Qihoo 360 considers sensitive, but reporting from Bloomberg’s Sarah Zheng offers insight into the type of questions China’s generative AI chatbots are programmed not to answer:

In Chinese, I had a strained WeChat conversation with Robot, a made-in-China bot built atop OpenAI’s GPT. It literally blocked me from asking innocuous questions like naming the leaders of China and the US, and the simple, albeit politically contentious, “What is Taiwan?” Even typing “Xi Jinping” was impossible.

In English, after a prolonged discussion, Robot revealed to me that it was programmed to avoid discussing “politically sensitive content about the Chinese government or Communist Party of China.” Asked what those topics were, it listed out issues including China’s strict internet censorship and even the 1989 Tiananmen Square protests, which it described as being “violently suppressed by the Chinese government.” This sort of information has long been inaccessible on the domestic internet.

Another chatbot called SuperAI, from Shenzhen-based startup Fengda Cloud Computing Technology Co., opened our conversation with the disclaimer: “Please note that I will avoid answering political questions related to China’s Xinjiang, Taiwan, or Hong Kong.” Clear and simple.

Others were less direct. The service from Shanghai-based MetaSOTA Technology Inc. — dubbed Lily in English — did not respond to prompts that included sensitive keywords like “human rights issues,” China’s Wolf Warrior diplomacy or Taiwanese President Tsai Ing-wen. A pop-up message said it was “inconvenient” to respond to these prompts. On topics like Taiwan, the chatbot specifically discouraged its interlocutor from using its responses to “engage in any illegal activities.” Asked about Chinese President Xi Jinping, Lily described him as a “very outstanding leader.” Pushed to name his flaws, the chatbot suggested that he may take too much time to make certain decisions due to the pressures he faces. [Source]